|

I am currently a Robotics Master's student at Worcester Polytechnic Institute, graduating in May 2024. I have had the opportunity to be part of multiple projects affiliated with the VISLab robotics lab at WPI. I work at the intersection of perception, deep learning, and embedded systems. Email Resume GitHub |

|

|

|

- Programming: Python, C++, C, MATLAB, Arduino, HTML, Bash

- Frameworks: PyTorch, TensorFlow, ONNX, CUDA, Open3D, NumPy, ROS, ROS2, Gazebo, Linux, Git, Docker, Flask

- DL Architectures: VGG16, NeRF, CompletionFormer, RangeNet, Segformer, Mask R-CNN, Transformers, LSTM

|

|

|

|

August 2022 - May 2024 (Expected) |

|

|

GPA : 4.0/4.0 |

- Teaching Assistant for MA 1022 : Calculus II (Fall 2022)

- Teaching Assistant for MA 1024 : Calculus IV (Spring 2023)

| Research Experience : Findability Sciences | January 2024 - Ongoing |

- Developing an LLM-based conversational interface for business users to request database records and industry reports.

- Fine-tuning foundational large language models like Llama using market forecasts and real estate-related analyst reports.

- Working on Retrieval Augmented Generation (RAG), SQL Generation and Large Language Model (LLM) optimization.

| Research Experience : VISLab | May 2023 - August 2023 |

- Implemented PointAttN, a Transformer network for point cloud completion.

- Experimented with the Geometric Details Perceptron (GDP) and Self Feature Augment (SFA) blocks in the encoder.

- The network attends to the relationships between points without dividing shapes into smaller regions.

- Implemented cross-layer information integration in the PointAttN network and improved the baseline results by 20%.

|

|

|

|

September 2023 - Ongoing |

|

|

- Working on the Sentry bot to create high-quality depth maps through sensor fusion, LiDAR-camera calibration, and classical computer vision techniques.

- Developing software for real-time data integration from a Velodyne LiDAR and a pair of long-range RGB cameras.

- Enhancing a depth estimation CNN architecture to accurately predict the probability of terrain traversability.

|

|

May 2023 - August 2023 |

|

|

- Worked on ZED2/GNSS odometry fusion to obtain an accurate position of the voidwalking bot. The final odometry integrated RTK GPS, visual odometry, and IMU data, enabling the bot to walk using GPS and ZED2 with an accuracy of 1 cm.

- Automated test cases in ROS2 for line rendering in RViz by calling the service through rqt.

- Constructed a Docker-integrated ROS package for SLAM on the environment, resulting in a 15% productivity boost.

|

|

May 2020 - Nov 2020 |

|

|

- Developed a deep learning pipeline to detect O-rings in camshafts and classify camshafts by the presence or absence of an O-ring. The project was deployed on the production line of Bajaj Auto Pvt. Ltd., Pune, for inspection purposes.

- Used augmented reality with OpenCV to augment the blind spot at a welding station in the same manufacturing plant.

- Prototyped and proposed a VR lab to the AIM Laboratory at WPI for experimentation in biomedical robotics research.

|

|

|

Fall 2023 Report: Link Code: GitHub Optimized a NeLF (Neural Light Field) based novel view synthesis model with techniques such as model pruning and quantization, and deployed it on an iPhone, demonstrating proficiency in on-device deep learning for real-time 3D novel view synthesis.

|

|

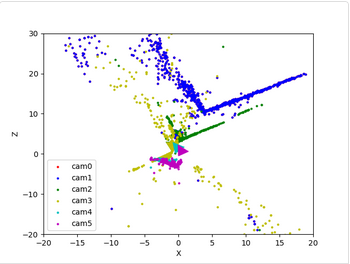

Spring 2023 Code: GitHub In this project, sensor fusion of LiDAR and camera data was carried out on the KITTI dataset to obtain a painted point cloud. |

|

Fall 2022 Report: Link Code: GitHub Performed panoptic segmentation, which combines the outputs of semantic segmentation and instance segmentation, on the SemanticKITTI dataset (3D LiDAR point cloud data) for Deep Learning under Prof. Fabricio Murai.

|

|

Spring 2023 Report: Link Code: GitHub In this project, I planned path traversal for non-holonomic robots using state-of-the-art algorithms.

|

|

Spring 2023 P&Q: Link NAS: Link DNI: Link Code: GitHub Code: GitHub These projects include neural network design from scratch and neural machine translation.

|

|

Summer 2023 Code: GitHub Simultaneously reconstructed the 3D scene (mapping) and extracted the camera pose (localization) from given stereo camera correspondences using a pipeline of non-linear triangulation, non-linear PnP, and bundle adjustment (BA).

|

|

Spring 2023 Code: GitHub

In this project, I detected edges in images with probability-based boundary detection, using K-means clustering of Oriented DoG (ODoG), Leung-Malik (LM), and Gabor filter bank responses. The results outperformed those of the classical Canny and Sobel filters. |

|

Spring 2023 Code: GitHub

In this project, I implemented the camera calibration method presented by Zhengyou Zhang, one of the hallmark papers in camera calibration. Estimated camera parameters such as the focal length, distortion coefficients, and principal point.

|

|

Spring 2023 Report: Link Code: GitHub

In this project, we implemented discrete action space algorithms such as DQN, DQN-MR, and DQN-PER on Highway-env, a third-party OpenAI Gym environment. We compared training time and accuracy and found that DQN-PER performed best.

|

|

Spring 2023 Code: GitHub Designed and deployed Sliding Mode Controllers for trajectory tracking for micro UAVs, with an acceptable error range of 1% while countering environmental noise. Generated a fifth-order trajectory with an accuracy of 0.03% for path planning. |

|

Spring 2023 Code: GitHub This project implements various search-based planners (Dijkstra, A*, D*) and sampling-based planners (RRT, RRT*, and Informed RRT*). The planners are implemented on a 2D map. |

|

Spring 2023 Code: GitHub These projects include implementations of the Epsilon-Greedy algorithm, Dynamic Programming, Monte Carlo, Temporal Difference Learning, and Model-based RL. |

|

Fall 2022 Code: GitHub

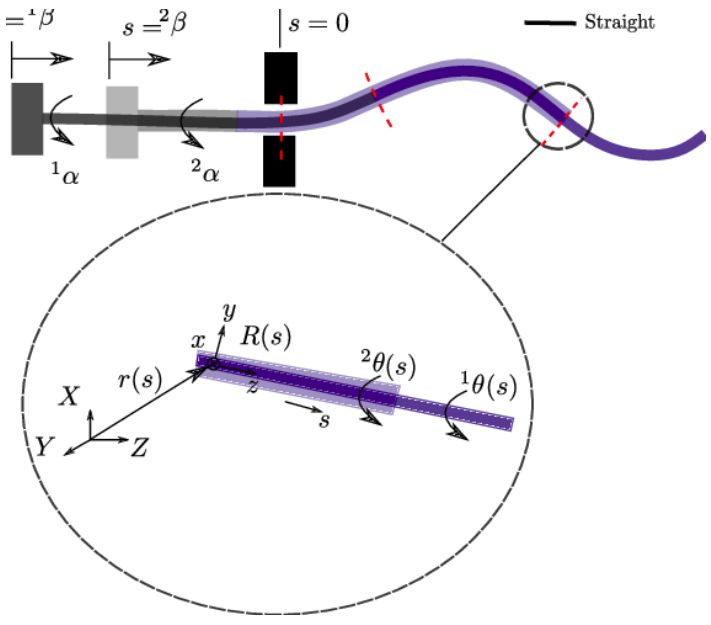

In this project, I reconstructed the Informed RRT algorithm for path planning of continuum robots, which are needle-sized manipulators used in image-guided surgical procedures. The planner accurately generated optimal and feasible paths in a dynamic environment.

|

|

|

Fall 2022 Code: GitHub Implemented a YOLO pipeline from scratch for object detection on street scene images. |

|

|

|

|

August 2016 - October 2020 |

|

|

GPA : 9.32/10.0 |

|

Great thanks to Jon for his amazing website template! |